One question we often hear is: “Why is my encoder not using all the CPU?” To answer it, let’s explore why CPU usage in encoders varies, how content complexity affects it, and why peak performance matters.

Care and attention are needed when selecting CPUs for real-time encoding. It’s also important to remember that only a handful of vendors, like us of course, have the expertise to address this topic.

CPU Usage in Encoding: Average vs. Peak

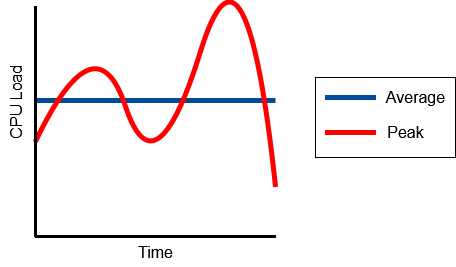

To understand why an encoder is not using all the CPU, we first need to differentiate between average and peak CPU usage. While average usage provides a general idea of how much processing power is being used over time, peak usage reflects the maximum demand during particularly complex encoding tasks.

We are regularly asked to add a CPU usage meter or graph to our products. But this hides the complexity of the real world. A single number or graph cannot capture worst-case CPU usage. A graph may misleadingly suggest that the CPU is lightly loaded, when in reality it shows only part of the story. Each point on such a graph is typically an average over a sampling interval (often a second or more), so a spike lasting only a few frames is smoothed away and instantaneous CPU usage never appears.
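To see how averaging hides spikes, here is a deliberately simplified sketch. It assumes each frame must be encoded within one frame interval (20 ms at 50 fps). The frame times are made-up numbers for illustration, not measurements from any encoder, and the `utilisation_report` helper is hypothetical.

```python
from statistics import mean


def utilisation_report(frame_times_ms, frame_interval_ms):
    """Return (average, peak) utilisation for a sequence of per-frame encode times.

    Utilisation of one frame = time spent encoding it / time available for it.
    """
    per_frame = [t / frame_interval_ms for t in frame_times_ms]
    return mean(per_frame), max(per_frame)


# Hypothetical one second of 50 fps video: 49 easy frames and one very complex one.
times = [8.0] * 49 + [30.0]
avg, peak = utilisation_report(times, frame_interval_ms=20.0)
print(f"average {avg:.0%}, peak {peak:.0%}")  # average 42%, peak 150%
```

A graph that samples once per second would show roughly 42% here, even though the complex frame needed 150% of its time budget. Real encoders spread work across threads and buffer a few frames, so the relationship is looser in practice. The averaging effect is the same, however.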

Peak CPU is what matters – if the CPU doesn’t have the performance to meet the peak workload, the picture will stutter and glitch. Real-time video processing isn’t like a web page that can load a hundred milliseconds late with nobody noticing – a video frame that is a hundred milliseconds late will cause a noticeable stutter.
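A small buffer can absorb a single slow frame, but it cannot absorb a sustained run of complex ones. The sketch below simulates a single sequential encoder fed at a fixed frame rate and reports which frames finish after a fixed latency budget. The `late_frames` function, the 60 ms budget and the per-frame costs are all hypothetical. They were chosen only to illustrate the effect.

```python
def late_frames(encode_times_ms, fps, latency_budget_ms):
    """Return indices of frames that finish more than latency_budget_ms after arriving.

    Simplified model: one encoder processes frames strictly in order, and a new
    frame arrives every 1/fps seconds.
    """
    interval_ms = 1000.0 / fps
    finish_ms = 0.0
    late = []
    for i, cost_ms in enumerate(encode_times_ms):
        arrival_ms = i * interval_ms
        # Encoding starts once the frame has arrived and the previous frame is done.
        start_ms = max(arrival_ms, finish_ms)
        finish_ms = start_ms + cost_ms
        if finish_ms > arrival_ms + latency_budget_ms:
            late.append(i)
    return late


# Hypothetical 50 fps clip: a quiet scene, a burst of 10 complex frames, then quiet again.
costs = [10.0] * 20 + [35.0] * 10 + [10.0] * 20
print(f"average cost: {sum(costs) / len(costs):.1f} ms per frame")
print("late frames:", late_frames(costs, fps=50, latency_budget_ms=60.0))
```

The average cost here is 15 ms per 20 ms frame, which is about 75% utilisation. Even so, frames 22 to 39 miss their deadline. The burst of complex frames builds a backlog faster than the encoder can drain it.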

Why Your Encoder May Not Be Using All the CPU

The reason an encoder does not use all the CPU boils down to one simple concept: variability. Encoder workloads vary, and they depend on several factors, explained below:

A: Content Complexity

One of the main reasons for variability in encoder CPU resource usage is the complexity of the content being processed. Different levels of complexity impose varying demands on the CPU. A static scene with minimal movement will require significantly less processing power than a fast-paced action sequence with multiple elements.

As a result, complex scenes cause spikes in CPU usage, while simpler scenes result in lower overall usage. The system must be designed to accommodate those worst-case spikes, even though they only happen occasionally.

If the system didn’t have the CPU headroom to handle these peaks, it would fall out of real time, leading to stutters and glitches. The most complex content is likely to appear at exactly the wrong moment, for example a key moment in a sports match, and a glitch there would be a disaster. Building a system that maintains real-time encoding 24/7 is a key difference between a professional encoding solution and a webcasting solution that only needs to work for a few hours.

B: Bitrate Settings

It’s counterintuitive, but a higher bitrate actually requires more CPU. One might think that video which is “compressed more” requires more CPU, but this isn’t the case. Higher bitrates require more CPU because more data has to be processed and written by CPU-intensive stages such as entropy coding.

It’s therefore important to test at the worst-case bitrate that might be used with an encoder. Testing at 10 Mbps and then needing 30 Mbps a month later is a recipe for disaster: the CPU chosen will most likely not be fast enough.
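One way to make this concrete is to record the settings an encoder was stress-tested at, and not to treat any more demanding configuration as validated. The sketch below uses a hypothetical helper for illustration. It checks bitrate and also the frame rate discussed next, because frame rate affects CPU load in the same direction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EncodeSettings:
    bitrate_mbps: float
    fps: float


def within_tested_envelope(tested: EncodeSettings, requested: EncodeSettings) -> bool:
    """True only if the requested settings are no more demanding than those stress-tested.

    Higher bitrates and higher frame rates both raise CPU load, so each requested
    value must be at or below the tested value.
    """
    return (requested.bitrate_mbps <= tested.bitrate_mbps
            and requested.fps <= tested.fps)


tested = EncodeSettings(bitrate_mbps=10, fps=50)
print(within_tested_envelope(tested, EncodeSettings(bitrate_mbps=8, fps=50)))   # True
print(within_tested_envelope(tested, EncodeSettings(bitrate_mbps=30, fps=50)))  # False: retest first
```

A settings check like this cannot capture content complexity. The stress test itself still needs to use worst-case material.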

C: Frame Rate

Frame rates are expressed in frames per second, so a higher frame rate means more frames to process every second. As a result, higher frame rates require more CPU. Roughly speaking, 1080p50 content requires twice as much CPU to encode as 1080p25 content. It’s also worth bearing in mind that 1080p60 content requires 20% more CPU to encode than 1080p50. This might not sound like a lot, but again it could be the difference between a smooth output and stuttering.
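The arithmetic behind these figures is simple if you assume, as the rough rule above does, that CPU load scales linearly with frame rate when resolution and content are held equal. The illustrative helpers below also show how the time available to encode each frame shrinks as the frame rate rises.

```python
def relative_cpu(fps, reference_fps):
    """Rough linear model: CPU load is proportional to frames encoded per second."""
    return fps / reference_fps


def frame_budget_ms(fps):
    """Time available to encode each frame while staying in real time."""
    return 1000.0 / fps


print(relative_cpu(50, 25))           # 2.0  (1080p50 vs 1080p25)
print(relative_cpu(60, 50))           # 1.2  (1080p60 vs 1080p50, i.e. 20% more)
print(round(frame_budget_ms(50), 2))  # 20.0
print(round(frame_budget_ms(60), 2))  # 16.67
```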

All of these factors can push peak CPU usage to three or more times the average. So happily watching a CPU graph and concluding that there is headroom is hugely misleading.

Planning for Peak Usage

To ensure reliable real-time performance, it’s essential to plan for peak usage scenarios: the worst-case demands. Calculating peak usage can be complicated, because it depends on the factors mentioned above: content complexity, bitrate settings and frame rate. It’s always wise to add a safety margin on top to account for unexpected spikes.

There is no hard-and-fast formula for this calculation; the best estimates come from experience. That’s why, when working with a vendor, it’s crucial to trust their worst-case calculations, even if you think they are too conservative.
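Once a worst-case figure has been measured on representative test material, turning it into a hardware requirement is straightforward arithmetic. The sketch below expresses load in hypothetical "cores' worth of work" units and applies a safety margin. The 20% default margin and the example numbers are assumptions for illustration, not a recommendation.

```python
import math


def cores_required(measured_peak_cores, margin=0.2):
    """Cores needed to cover the measured worst-case load plus a safety margin."""
    return math.ceil(measured_peak_cores * (1.0 + margin))


# Hypothetical measurements for one channel: the CPU graph averages 4 cores,
# but the worst-case test material peaks at 12 (three times the average).
average_cores, peak_cores = 4.0, 12.0
print("sized on average:", cores_required(average_cores))  # 5
print("sized on peak:   ", cores_required(peak_cores))     # 15
```

The hard part is the measured peak itself, which has to come from stress testing. The margin only covers what that testing did not anticipate.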

In our lab, we use extremely complex test material to stress the encoder, and we calculate our worst case from it. The worst case also depends on the CPU model, as different CPUs have different speeds and capabilities. As we have explained in previous blog posts, Intel CPUs have actually been getting slower as they struggle to compete in the marketplace.

Can You Add More Channels?

Another question we often hear is: “Can we add more channels to the system? The CPU load is low.”

While low CPU usage may suggest that you can accommodate more channels, once again, it’s important to consider the potential for peak usage. Adding channels without proper planning can lead to performance issues during high-demand periods.
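The difference between the two ways of answering this question can be sketched in a few lines. The function below is hypothetical and makes a conservative assumption: every channel can hit its peak at the same time. That is realistic when, for example, several channels carry the same live event. The capacity and per-channel figures are invented for illustration.

```python
import math


def max_channels(capacity_cores, per_channel_cores, margin=0.2):
    """How many channels fit if each may need per_channel_cores at the same moment."""
    return math.floor(capacity_cores / (per_channel_cores * (1.0 + margin)))


capacity = 32.0                               # hypothetical server
avg_per_channel, peak_per_channel = 2.0, 6.0  # hypothetical figures; peak is 3x average
print("judged on average load:", max_channels(capacity, avg_per_channel))   # 13
print("judged on peak load:   ", max_channels(capacity, peak_per_channel))  # 4
```

A low reading on the CPU graph suggests room for 13 channels here. Planning for peaks allows only 4.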

I’ll Use a Hardware Encoder, Then

Sadly, hardware encoders aren’t a magic bullet. They have the same issue internally: certain types of content can overstress them too. Being hardware doesn’t mean they can reliably process all the content in the world. In one sense it’s actually worse, because they are black boxes. There is no way to monitor whether they are being overstressed until disaster strikes and the unit crashes or glitches the output.

Conclusion

It’s normal for a CPU not to operate at maximum utilisation all the time. Complex content and other factors cause peaks in CPU usage, and servers need to be specified for worst-case content, not just for the average.

There are only a handful of vendors with the experience needed to specify a hardware platform suitable for 24/7 encoding, and choosing the right platform is key. We have extensive experience in doing this and the widest set of hardware platforms available in the market.

Learn more about how we are leaders in the transition to software-based video contribution: https://www.obe.tv/portfolio/encoding-and-decoding/